Process Analytics & Process Mining with pm4py ~ Case study of an 'Order to Cash' process

I have a look at the pm4py python package of Fraunhofer Institute, which analog to R’s bupaR package is able to mine and analyse processes through its log data.

Analysing event data is an iterative process of three steps: extraction, processing and analysis.

There are three perspectives on a process and its analytics:

- organizational perspective (focus on the actors)

- control-flow perspective (focus on the flow and structuredness of the process)

- performance perspective (focusses on time and efficiency)

Reminder: Each unique sequence of activities is called a trace or process variant.

import os

os.environ["PATH"] += os.pathsep + 'C://Program Files//Graphviz//bin'

import pandas as pd

import numpy as np

from datetime import date

from IPython.display import Markdown, display

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_style('darkgrid')

import pm4py

import pyreadr

print('\n'.join(f'{m.__name__}=={m.__version__}' for m in globals().values() if getattr(m, '__version__', None)))

pandas==1.3.4

numpy==1.21.5

seaborn==0.11.2

pm4py==2.2.19.2

pyreadr==0.4.4

import sys

print('current env:' , sys.exec_prefix.split(os.sep)[-1] )

current env: pm4py

We have got log data files for an order-to-cash process that we analyse end-to-end.

At first we transform data from various sources to an common event log.

After that we get a full overview picture of the process, explore the dimensions of the data and its different activities, stages and flows in the process.

Finally, we apply analysis tools to formulate an answer to a few questions of interest.

Quotations Data (1/3)

It starts with preprocessing our process data from three log data sources. With some ETL and data wrangling we combine them finally in one large datafram.

quotations = pyreadr.read_r('otc_quotations.RDS')

quotations = quotations[None]

quotations.head()

| quotation_id | cancelled_at | cancelled_by | manufactContacted_at | manufactContacted_by | received_at | received_by | reminded_at | reminded_by | send_at | send_by | supplierContacted_at | supplierContacted_by | supplierOfferReceived_at | supplierOfferReceived_by | warehouseContacted_at | warehouseContacted_by | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | quo-1003 | 2017-05-22 13:28:04 | Katherine | 2017-04-22 17:58:11 | Kimberly | 2017-04-16 20:34:12 | Andrea | 2017-05-14 19:06:41 | Jonathan | 2017-05-08 14:20:30 | Katherine | 2017-04-29 13:43:18 | Matthew | 2017-05-03 19:09:21 | Matthew | 2017-04-24 19:36:10 | Kimberly |

| 1 | quo-1004 | NaN | NaN | 2017-06-18 13:47:50 | Kimberly | 2017-06-09 11:19:31 | Jonathan | NaN | NaN | 2017-07-02 18:50:58 | Andrea | 2017-06-20 12:19:31 | Kimberly | 2017-06-23 19:33:10 | Kimberly | 2017-06-15 19:30:07 | Kimberly |

| 2 | quo-1006 | NaN | NaN | 2017-10-28 13:55:51 | Kimberly | 2017-10-14 18:55:47 | Jonathan | NaN | NaN | 2017-11-09 11:27:11 | Andrea | 2017-10-26 18:06:29 | Kimberly | 2017-10-30 10:36:44 | Matthew | 2017-10-22 17:57:26 | Kimberly |

| 3 | quo-1008 | NaN | NaN | NaN | NaN | 2017-09-08 13:29:05 | Jonathan | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| 4 | quo-1010 | 2017-10-08 14:35:20 | Jonathan | NaN | NaN | 2017-09-03 15:52:35 | Jonathan | 2017-10-02 16:25:02 | Jonathan | 2017-09-25 16:56:13 | Andrea | 2017-09-10 16:07:59 | Kimberly | 2017-09-14 14:37:03 | Matthew | 2017-09-16 17:14:54 | Matthew |

# Inspect quotations

quotations.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 1833 entries, 0 to 1832

Data columns (total 17 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 quotation_id 1833 non-null object

1 cancelled_at 255 non-null object

2 cancelled_by 255 non-null category

3 manufactContacted_at 743 non-null object

4 manufactContacted_by 743 non-null category

5 received_at 1833 non-null object

6 received_by 1833 non-null category

7 reminded_at 504 non-null object

8 reminded_by 504 non-null category

9 send_at 1461 non-null object

10 send_by 1461 non-null category

11 supplierContacted_at 1099 non-null object

12 supplierContacted_by 1099 non-null category

13 supplierOfferReceived_at 1099 non-null object

14 supplierOfferReceived_by 1099 non-null category

15 warehouseContacted_at 741 non-null object

16 warehouseContacted_by 741 non-null category

dtypes: category(8), object(9)

memory usage: 144.3+ KB

offer_history = quotations.melt(id_vars='quotation_id')

offer_history

| quotation_id | variable | value | |

|---|---|---|---|

| 0 | quo-1003 | cancelled_at | 2017-05-22 13:28:04 |

| 1 | quo-1004 | cancelled_at | NaN |

| 2 | quo-1006 | cancelled_at | NaN |

| 3 | quo-1008 | cancelled_at | NaN |

| 4 | quo-1010 | cancelled_at | 2017-10-08 14:35:20 |

| ... | ... | ... | ... |

| 29323 | quo-985 | warehouseContacted_by | Kimberly |

| 29324 | quo-986 | warehouseContacted_by | Matthew |

| 29325 | quo-987 | warehouseContacted_by | Matthew |

| 29326 | quo-994 | warehouseContacted_by | NaN |

| 29327 | quo-996 | warehouseContacted_by | NaN |

29328 rows × 3 columns

offer_history[['activity','info']] = offer_history.variable.str.split('_', expand=True)

offer_history.drop('variable', axis=1, inplace=True)

offer_history = offer_history[['quotation_id', 'activity', 'info', 'value']]

offer_history

| quotation_id | activity | info | value | |

|---|---|---|---|---|

| 0 | quo-1003 | cancelled | at | 2017-05-22 13:28:04 |

| 1 | quo-1004 | cancelled | at | NaN |

| 2 | quo-1006 | cancelled | at | NaN |

| 3 | quo-1008 | cancelled | at | NaN |

| 4 | quo-1010 | cancelled | at | 2017-10-08 14:35:20 |

| ... | ... | ... | ... | ... |

| 29323 | quo-985 | warehouseContacted | by | Kimberly |

| 29324 | quo-986 | warehouseContacted | by | Matthew |

| 29325 | quo-987 | warehouseContacted | by | Matthew |

| 29326 | quo-994 | warehouseContacted | by | NaN |

| 29327 | quo-996 | warehouseContacted | by | NaN |

29328 rows × 4 columns

recode = {"info": {"at": "timestamp", "by": "resource"}}

offer_history_recode = offer_history.replace(recode)

offer_history_recode['info'] = offer_history_recode['info'].astype('category')

offer_history_recode

| quotation_id | activity | info | value | |

|---|---|---|---|---|

| 0 | quo-1003 | cancelled | timestamp | 2017-05-22 13:28:04 |

| 1 | quo-1004 | cancelled | timestamp | NaN |

| 2 | quo-1006 | cancelled | timestamp | NaN |

| 3 | quo-1008 | cancelled | timestamp | NaN |

| 4 | quo-1010 | cancelled | timestamp | 2017-10-08 14:35:20 |

| ... | ... | ... | ... | ... |

| 29323 | quo-985 | warehouseContacted | resource | Kimberly |

| 29324 | quo-986 | warehouseContacted | resource | Matthew |

| 29325 | quo-987 | warehouseContacted | resource | Matthew |

| 29326 | quo-994 | warehouseContacted | resource | NaN |

| 29327 | quo-996 | warehouseContacted | resource | NaN |

29328 rows × 4 columns

offer_history_spread = offer_history_recode.pivot( index=['quotation_id', 'activity'], columns='info', values='value').reset_index()

offer_history_spread

| info | quotation_id | activity | resource | timestamp |

|---|---|---|---|---|

| 0 | quo-1003 | cancelled | Katherine | 2017-05-22 13:28:04 |

| 1 | quo-1003 | manufactContacted | Kimberly | 2017-04-22 17:58:11 |

| 2 | quo-1003 | received | Andrea | 2017-04-16 20:34:12 |

| 3 | quo-1003 | reminded | Jonathan | 2017-05-14 19:06:41 |

| 4 | quo-1003 | send | Katherine | 2017-05-08 14:20:30 |

| ... | ... | ... | ... | ... |

| 14659 | quo-996 | reminded | NaN | NaN |

| 14660 | quo-996 | send | Andrea | 2017-01-30 18:53:32 |

| 14661 | quo-996 | supplierContacted | NaN | NaN |

| 14662 | quo-996 | supplierOfferReceived | NaN | NaN |

| 14663 | quo-996 | warehouseContacted | NaN | NaN |

14664 rows × 4 columns

Validations Data (2/3)

validations = pyreadr.read_r('otc_validations.RDS')

validations = validations[None]

validations

| quotation_id | resource | started | completed | |

|---|---|---|---|---|

| 0 | quo-1003 | Jonathan | 2017-04-17 14:59:08 | 2017-04-19 18:32:57 |

| 1 | quo-1004 | Andrea | 2017-06-11 13:10:45 | 2017-06-13 12:18:57 |

| 2 | quo-1006 | Katherine | 2017-10-16 15:59:18 | 2017-10-18 16:21:56 |

| 3 | quo-1008 | Andrea | 2017-09-09 17:58:39 | 2017-09-12 20:58:14 |

| 4 | quo-1010 | Andrea | 2017-09-05 17:40:32 | 2017-09-08 15:48:41 |

| ... | ... | ... | ... | ... |

| 1828 | quo-985 | Andrea | 2017-03-29 19:15:36 | 2017-04-01 14:33:23 |

| 1829 | quo-986 | Katherine | 2017-10-23 11:28:02 | 2017-10-26 14:49:49 |

| 1830 | quo-987 | Katherine | 2017-02-14 16:27:52 | 2017-02-17 11:33:17 |

| 1831 | quo-994 | Andrea | 2017-09-12 18:37:27 | 2017-09-13 15:25:31 |

| 1832 | quo-996 | Katherine | 2017-01-17 18:16:26 | 2017-01-18 18:42:02 |

1833 rows × 4 columns

validate_history = validations

validate_history['activity'] = "Validate"

validate_history['action'] = validate_history.quotation_id + '-validate'

validate_history

| quotation_id | resource | started | completed | activity | action | |

|---|---|---|---|---|---|---|

| 0 | quo-1003 | Jonathan | 2017-04-17 14:59:08 | 2017-04-19 18:32:57 | Validate | quo-1003-validate |

| 1 | quo-1004 | Andrea | 2017-06-11 13:10:45 | 2017-06-13 12:18:57 | Validate | quo-1004-validate |

| 2 | quo-1006 | Katherine | 2017-10-16 15:59:18 | 2017-10-18 16:21:56 | Validate | quo-1006-validate |

| 3 | quo-1008 | Andrea | 2017-09-09 17:58:39 | 2017-09-12 20:58:14 | Validate | quo-1008-validate |

| 4 | quo-1010 | Andrea | 2017-09-05 17:40:32 | 2017-09-08 15:48:41 | Validate | quo-1010-validate |

| ... | ... | ... | ... | ... | ... | ... |

| 1828 | quo-985 | Andrea | 2017-03-29 19:15:36 | 2017-04-01 14:33:23 | Validate | quo-985-validate |

| 1829 | quo-986 | Katherine | 2017-10-23 11:28:02 | 2017-10-26 14:49:49 | Validate | quo-986-validate |

| 1830 | quo-987 | Katherine | 2017-02-14 16:27:52 | 2017-02-17 11:33:17 | Validate | quo-987-validate |

| 1831 | quo-994 | Andrea | 2017-09-12 18:37:27 | 2017-09-13 15:25:31 | Validate | quo-994-validate |

| 1832 | quo-996 | Katherine | 2017-01-17 18:16:26 | 2017-01-18 18:42:02 | Validate | quo-996-validate |

1833 rows × 6 columns

validate_history_gathered = validate_history.melt(id_vars=['quotation_id','resource', 'activity','action'],

var_name='lifecycle', value_name='timestamp'

)

validate_history_gathered

| quotation_id | resource | activity | action | lifecycle | timestamp | |

|---|---|---|---|---|---|---|

| 0 | quo-1003 | Jonathan | Validate | quo-1003-validate | started | 2017-04-17 14:59:08 |

| 1 | quo-1004 | Andrea | Validate | quo-1004-validate | started | 2017-06-11 13:10:45 |

| 2 | quo-1006 | Katherine | Validate | quo-1006-validate | started | 2017-10-16 15:59:18 |

| 3 | quo-1008 | Andrea | Validate | quo-1008-validate | started | 2017-09-09 17:58:39 |

| 4 | quo-1010 | Andrea | Validate | quo-1010-validate | started | 2017-09-05 17:40:32 |

| ... | ... | ... | ... | ... | ... | ... |

| 3661 | quo-985 | Andrea | Validate | quo-985-validate | completed | 2017-04-01 14:33:23 |

| 3662 | quo-986 | Katherine | Validate | quo-986-validate | completed | 2017-10-26 14:49:49 |

| 3663 | quo-987 | Katherine | Validate | quo-987-validate | completed | 2017-02-17 11:33:17 |

| 3664 | quo-994 | Andrea | Validate | quo-994-validate | completed | 2017-09-13 15:25:31 |

| 3665 | quo-996 | Katherine | Validate | quo-996-validate | completed | 2017-01-18 18:42:02 |

3666 rows × 6 columns

recode = {"lifecycle": {"started": "start", "completed": "complete"}}

validate_history_recode = validate_history_gathered.replace(recode)

validate_history_recode['lifecycle'] = validate_history_recode['lifecycle'].astype('category')

validate_history_recode

| quotation_id | resource | activity | action | lifecycle | timestamp | |

|---|---|---|---|---|---|---|

| 0 | quo-1003 | Jonathan | Validate | quo-1003-validate | start | 2017-04-17 14:59:08 |

| 1 | quo-1004 | Andrea | Validate | quo-1004-validate | start | 2017-06-11 13:10:45 |

| 2 | quo-1006 | Katherine | Validate | quo-1006-validate | start | 2017-10-16 15:59:18 |

| 3 | quo-1008 | Andrea | Validate | quo-1008-validate | start | 2017-09-09 17:58:39 |

| 4 | quo-1010 | Andrea | Validate | quo-1010-validate | start | 2017-09-05 17:40:32 |

| ... | ... | ... | ... | ... | ... | ... |

| 3661 | quo-985 | Andrea | Validate | quo-985-validate | complete | 2017-04-01 14:33:23 |

| 3662 | quo-986 | Katherine | Validate | quo-986-validate | complete | 2017-10-26 14:49:49 |

| 3663 | quo-987 | Katherine | Validate | quo-987-validate | complete | 2017-02-17 11:33:17 |

| 3664 | quo-994 | Andrea | Validate | quo-994-validate | complete | 2017-09-13 15:25:31 |

| 3665 | quo-996 | Katherine | Validate | quo-996-validate | complete | 2017-01-18 18:42:02 |

3666 rows × 6 columns

bring both dataframes on the same variable names and with identical lifecycle encoding

offer_history_recode = offer_history_spread

offer_history_recode['lifecycle'] = "complete"

offer_history_recode['increment_num'] = range(0+1, len(offer_history_recode.quotation_id)+1)

offer_history_recode['action'] = offer_history_recode.quotation_id.astype(str) + '-' + offer_history_recode.increment_num.astype(str)

offer_history_recode.drop('increment_num', axis=1, inplace=True)

offer_history_recode = offer_history_recode.dropna() # to arrive at 7735 rows - undocumented in case study

# concat quotations + validations histories to sales history

sales_history2 = pd.concat([offer_history_recode,validate_history_recode])

sales_history2

| quotation_id | activity | resource | timestamp | lifecycle | action | |

|---|---|---|---|---|---|---|

| 0 | quo-1003 | cancelled | Katherine | 2017-05-22 13:28:04 | complete | quo-1003-1 |

| 1 | quo-1003 | manufactContacted | Kimberly | 2017-04-22 17:58:11 | complete | quo-1003-2 |

| 2 | quo-1003 | received | Andrea | 2017-04-16 20:34:12 | complete | quo-1003-3 |

| 3 | quo-1003 | reminded | Jonathan | 2017-05-14 19:06:41 | complete | quo-1003-4 |

| 4 | quo-1003 | send | Katherine | 2017-05-08 14:20:30 | complete | quo-1003-5 |

| ... | ... | ... | ... | ... | ... | ... |

| 3661 | quo-985 | Validate | Andrea | 2017-04-01 14:33:23 | complete | quo-985-validate |

| 3662 | quo-986 | Validate | Katherine | 2017-10-26 14:49:49 | complete | quo-986-validate |

| 3663 | quo-987 | Validate | Katherine | 2017-02-17 11:33:17 | complete | quo-987-validate |

| 3664 | quo-994 | Validate | Andrea | 2017-09-13 15:25:31 | complete | quo-994-validate |

| 3665 | quo-996 | Validate | Katherine | 2017-01-18 18:42:02 | complete | quo-996-validate |

11401 rows × 6 columns

Sales Data (3/3)

sales_history = pyreadr.read_r('otc_sales_history.RDS')

sales_history = sales_history[None]

sales_history

| quotation_id | resource | activity | action | lifecycle | timestamp | sales_order_id | |

|---|---|---|---|---|---|---|---|

| 0 | quo-1003 | Jonathan | Validate | quo-1003-validate | start | 2017-04-17 14:59:08 | NaN |

| 1 | quo-1004 | Andrea | Validate | quo-1004-validate | start | 2017-06-11 13:10:45 | order-17-56548 |

| 2 | quo-1006 | Katherine | Validate | quo-1006-validate | start | 2017-10-16 15:59:18 | order-17-56550 |

| 3 | quo-1008 | Andrea | Validate | quo-1008-validate | start | 2017-09-09 17:58:39 | NaN |

| 4 | quo-1010 | Andrea | Validate | quo-1010-validate | start | 2017-09-05 17:40:32 | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 14690 | quo-929 | Katherine | Decline Request For Quotation | quo-929-decline | complete | 2017-04-21 15:32:49 | NaN |

| 14691 | quo-930 | Andrea | Decline Request For Quotation | quo-930-decline | complete | 2017-10-29 18:07:52 | NaN |

| 14692 | quo-969 | Katherine | Decline Request For Quotation | quo-969-decline | complete | 2017-06-03 11:59:36 | NaN |

| 14693 | quo-983 | Katherine | Decline Request For Quotation | quo-983-decline | complete | 2017-01-18 15:42:26 | NaN |

| 14694 | quo-994 | Andrea | Decline Request For Quotation | quo-994-decline | complete | 2017-09-17 17:46:38 | NaN |

14695 rows × 7 columns

order_history = pyreadr.read_r('otc_order_history.RDS')

order_history = order_history[None]

order_history.rename(columns = {'time':'timestamp'}, inplace = True)

order_history

| sales_order_id | action | activity | resource | status | timestamp | activity_cost | quotation_id | |

|---|---|---|---|---|---|---|---|---|

| 0 | order-17-56542 | order-17-56542-0000001 | Receive Sales Order | Katherine | start | 2017-10-17 12:37:22 | NaN | NaN |

| 1 | order-17-56542 | order-17-56542-0000002 | Send To Manufacturing | Jonathan | start | 2017-10-19 15:30:40 | NaN | NaN |

| 2 | order-17-56543 | order-17-56543-0000003 | Receive Sales Order | Andrea | start | 2017-11-04 15:27:23 | NaN | NaN |

| 3 | order-17-56543 | order-17-56543-0000004 | Send To Manufacturing | Jonathan | start | 2017-11-13 15:08:58 | NaN | NaN |

| 4 | order-17-56544 | order-17-56544-0000005 | Receive Sales Order | Andrea | start | 2017-04-08 15:10:53 | NaN | NaN |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 60799 | order-17-59561 | order-17-59561-0060800 | Prepare Invoice | Virginia | complete | 2017-03-09 12:21:34 | NaN | NaN |

| 60800 | order-17-59562 | order-17-59562-0060801 | Prepare Invoice | Virginia | complete | 2017-03-26 19:26:07 | NaN | quo-996 |

| 60801 | order-17-59563 | order-17-59563-0060802 | Prepare Invoice | George | complete | 2017-11-24 18:46:48 | NaN | NaN |

| 60802 | order-17-59564 | order-17-59564-0060803 | Prepare Invoice | George | complete | 2017-03-04 10:30:07 | NaN | NaN |

| 60803 | order-17-59565 | order-17-59565-0060804 | Prepare Invoice | George | complete | 2017-05-10 14:02:24 | NaN | NaN |

60804 rows × 8 columns

otc = pd.concat([sales_history, order_history])

#otc[otc.activity == 'Receive Sales Order']

otc.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 75499 entries, 0 to 60803

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 quotation_id 38922 non-null object

1 resource 75499 non-null object

2 activity 75499 non-null object

3 action 75499 non-null object

4 lifecycle 14695 non-null object

5 timestamp 75499 non-null object

6 sales_order_id 71321 non-null object

7 status 60804 non-null category

8 activity_cost 14100 non-null float64

dtypes: category(1), float64(1), object(7)

memory usage: 5.3+ MB

otc_event_log = otc.copy()

otc_event_log["timestamp"] = pd.to_datetime(otc_event_log["timestamp"])

otc_event_log = otc_event_log.sort_values(by=["timestamp"]) # https://aginic.com/blog/your-guide-to-process-mining/

otc_event_log['case_id'] = otc_event_log.quotation_id.fillna('') + '-' + otc_event_log.sales_order_id.fillna('')

#df['new_col'] = df['foodstuff'].fillna('') + df['type'].fillna('')

#otc_event_log['case_id'] = otc_event_log['quotation_id'].combine(otc_event_log['sales_order_id'], lambda a, b: ((a or "") + (b or "")) or None, None)

otc_event_log

| quotation_id | resource | activity | action | lifecycle | timestamp | sales_order_id | status | activity_cost | case_id | |

|---|---|---|---|---|---|---|---|---|---|---|

| 10949 | quo-808 | Katherine | Receive Request For Quotation | quo-808-13875 | complete | 2017-01-01 12:13:56 | order-17-59385 | NaN | NaN | quo-808-order-17-59385 |

| 9936 | quo-3636 | Andrea | Receive Request For Quotation | quo-3636-11915 | complete | 2017-01-01 12:28:35 | order-17-58955 | NaN | NaN | quo-3636-order-17-58955 |

| 11047 | quo-846 | Katherine | Receive Request For Quotation | quo-846-14035 | complete | 2017-01-01 12:37:46 | order-17-59422 | NaN | NaN | quo-846-order-17-59422 |

| 3020 | NaN | Jonathan | Receive Sales Order | order-17-58052-0003021 | NaN | 2017-01-01 13:55:11 | order-17-58052 | start | NaN | -order-17-58052 |

| 4370 | NaN | Jonathan | Receive Sales Order | order-17-58727-0004371 | NaN | 2017-01-01 14:32:18 | order-17-58727 | start | NaN | -order-17-58727 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 47988 | quo-1186 | George | Send Last Reminder | order-17-56717-0047989 | NaN | 2018-04-16 15:08:27 | order-17-56717 | start | NaN | quo-1186-order-17-56717 |

| 50151 | quo-1879 | Virginia | Payment Received | order-17-57350-0050152 | NaN | 2018-04-17 13:09:40 | order-17-57350 | start | NaN | quo-1879-order-17-57350 |

| 47989 | quo-1186 | George | Payment Received | order-17-56717-0047990 | NaN | 2018-04-19 14:00:10 | order-17-56717 | start | NaN | quo-1186-order-17-56717 |

| 30137 | quo-2660 | Tina | Fix Goods | order-17-58065-0030138 | NaN | 2018-04-20 10:12:04 | order-17-58065 | complete | NaN | quo-2660-order-17-58065 |

| 52625 | quo-2660 | George | Payment Received | order-17-58065-0052626 | NaN | 2018-04-22 19:56:13 | order-17-58065 | start | NaN | quo-2660-order-17-58065 |

75499 rows × 10 columns

len(otc_event_log.sales_order_id.unique())

3025

# only slice cases with sales orders

otc_event_log_only_so = otc_event_log[~otc_event_log.sales_order_id.isnull()]

otc_event_log_only_so.sales_order_id.isnull().sum()

0

# pm4py needs for later applied methods caseid column in the first column of the dataframe

# get rid of unused cols

otc_event_log_only_so2 = otc_event_log_only_so.copy().drop(['lifecycle', 'status'], axis=1)

first_column_to_be = otc_event_log_only_so2.pop('sales_order_id')

otc_event_log_only_so2.insert(0, 'sales_order_id', first_column_to_be)

otc_event_log_only_so2.shape

(71321, 8)

#otc_event_log[otc_event_log.activity == 'Receive Request For Quotation']

from pm4py.objects.conversion.log import converter as log_converter

#parameters = {log_converter.Variants.TO_EVENT_LOG.value.Parameters.CASE_ID_KEY: 'sales_order_id'}

#parameters = {log_converter.Variants.TO_EVENT_LOG.value.Parameters.ACTIVITY_KEY: 'activity'}

#parameters = {log_converter.Variants.TO_EVENT_LOG.value.Parameters.TIMESTAMP_KEY: 'timestamp'}

#event_log = log_converter.apply(otc, parameters=parameters, variant=log_converter.Variants.TO_EVENT_LOG)

event_log = pm4py.format_dataframe(otc_event_log_only_so2, case_id='sales_order_id', activity_key='activity', timestamp_key='timestamp')

type(event_log)

pandas.core.frame.DataFrame

start_activities = pm4py.get_start_activities(event_log)

end_activities = pm4py.get_end_activities(event_log)

print("Start activities: {}\nEnd activities: {}".format(start_activities, end_activities))

Start activities: {'Receive Sales Order': 1818, 'Receive Request For Quotation': 1206}

End activities: {'Payment Received': 2564, 'Create Credit Note': 204, 'Send Invoice': 175, 'Submit To Credit Collection': 81}

# process mining

from pm4py.algo.discovery.alpha import algorithm as alpha_miner

from pm4py.algo.discovery.inductive import algorithm as inductive_miner

from pm4py.algo.discovery.heuristics import algorithm as heuristics_miner

from pm4py.algo.discovery.dfg import algorithm as dfg_discovery

# viz

from pm4py.visualization.petrinet import visualizer as pn_visualizer

from pm4py.visualization.process_tree import visualizer as pt_visualizer

from pm4py.visualization.heuristics_net import visualizer as hn_visualizer

from pm4py.visualization.dfg import visualizer as dfg_visualization

# misc

from pm4py.objects.conversion.process_tree import converter as pt_converter

# alpha miner

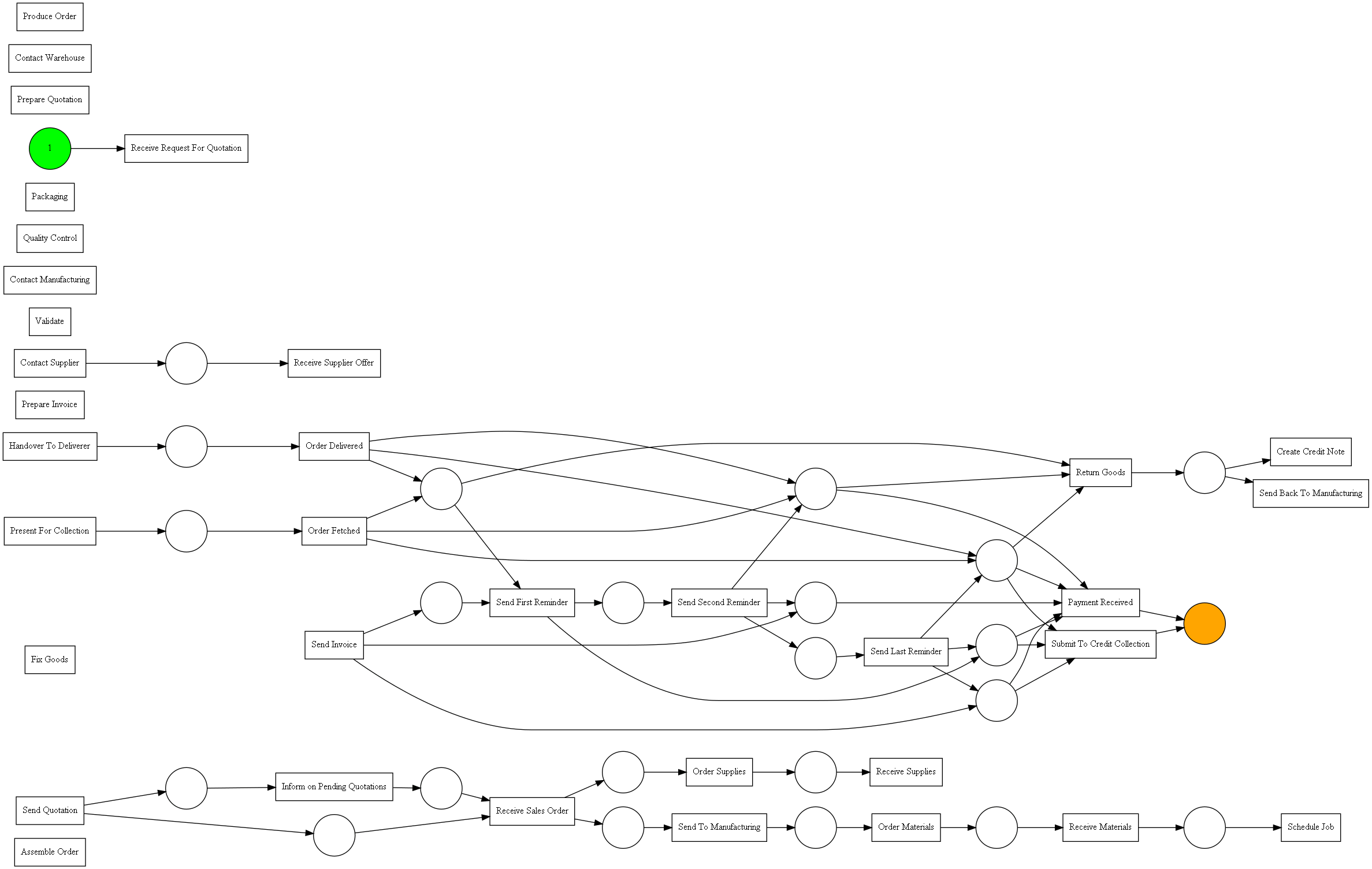

net, initial_marking, final_marking = alpha_miner.apply(event_log)

# Visualise

gviz = pn_visualizer.apply(net, initial_marking, final_marking)

pn_visualizer.view(gviz)

C:\Users\SD\anaconda3\envs\pm4py\lib\site-packages\ipykernel_launcher.py:2: DeprecatedWarning: apply is deprecated as of 2.2.5 and will be removed in 3.0.0. please use pm4py.visualization.petri_net.algorithm instead

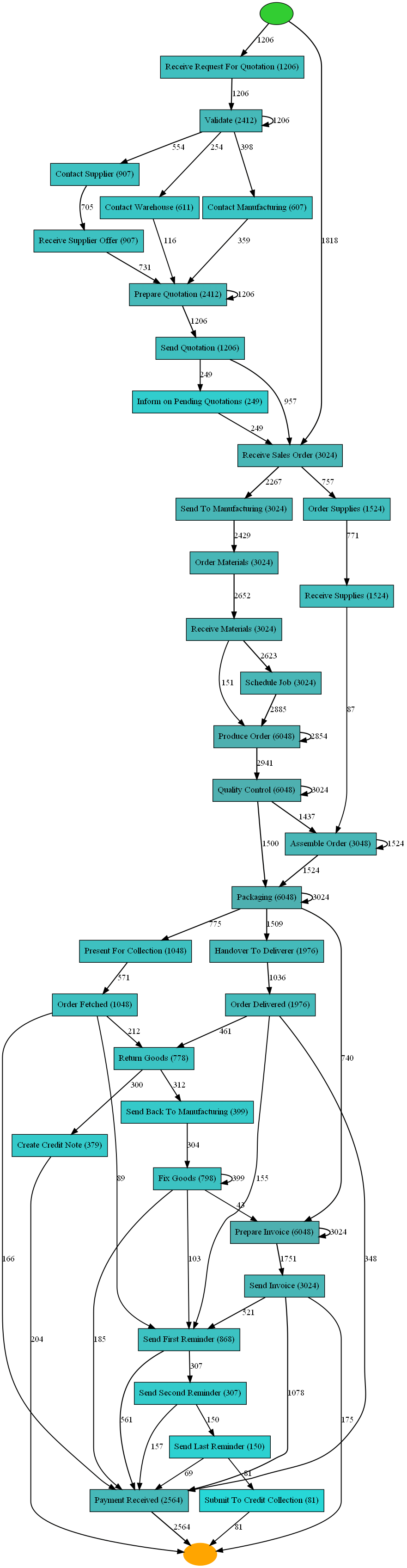

# heuristics miner

heu_net = heuristics_miner.apply_heu(event_log)

# viz

gviz = hn_visualizer.apply(heu_net)

hn_visualizer.view(gviz)

from pm4py.algo.filtering.log.start_activities import start_activities_filter

log_start = start_activities_filter.get_start_activities(event_log)

log_start

{}

type(event_log)

pandas.core.frame.DataFrame

event_log.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 71321 entries, 0 to 57779

Data columns (total 13 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 sales_order_id 71321 non-null object

1 quotation_id 34744 non-null object

2 resource 71321 non-null object

3 activity 71321 non-null object

4 action 71321 non-null object

5 timestamp 71321 non-null datetime64[ns]

6 activity_cost 14100 non-null float64

7 case_id 71321 non-null object

8 case:concept:name 71321 non-null string

9 concept:name 71321 non-null string

10 time:timestamp 71321 non-null datetime64[ns]

11 @@index 71321 non-null int64

12 start_timestamp 71321 non-null datetime64[ns]

dtypes: datetime64[ns](3), float64(1), int64(1), object(6), string(2)

memory usage: 7.6+ MB

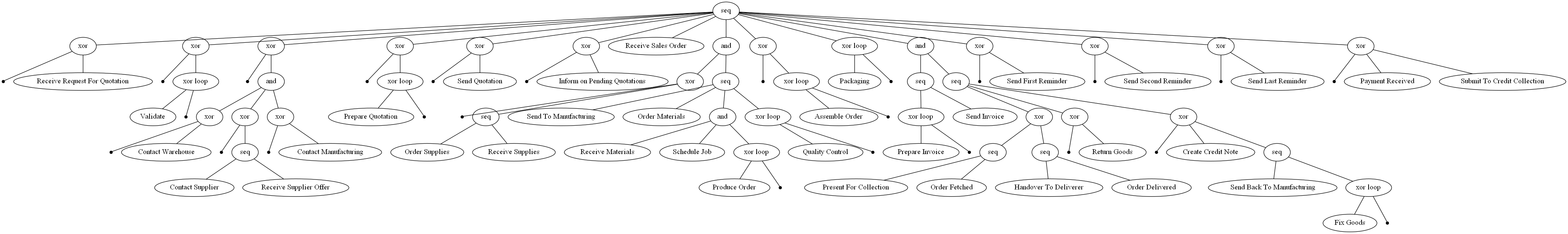

process_tree = pm4py.discover_process_tree_inductive(event_log)

pm4py.view_process_tree(process_tree, #bgcolor='white'

#parameters={'bgcolor': 'white'}

)

otc_event_log_only_so = pm4py.format_dataframe(otc_event_log_only_so.copy(), case_id='sales_order_id', activity_key='activity', timestamp_key='timestamp')

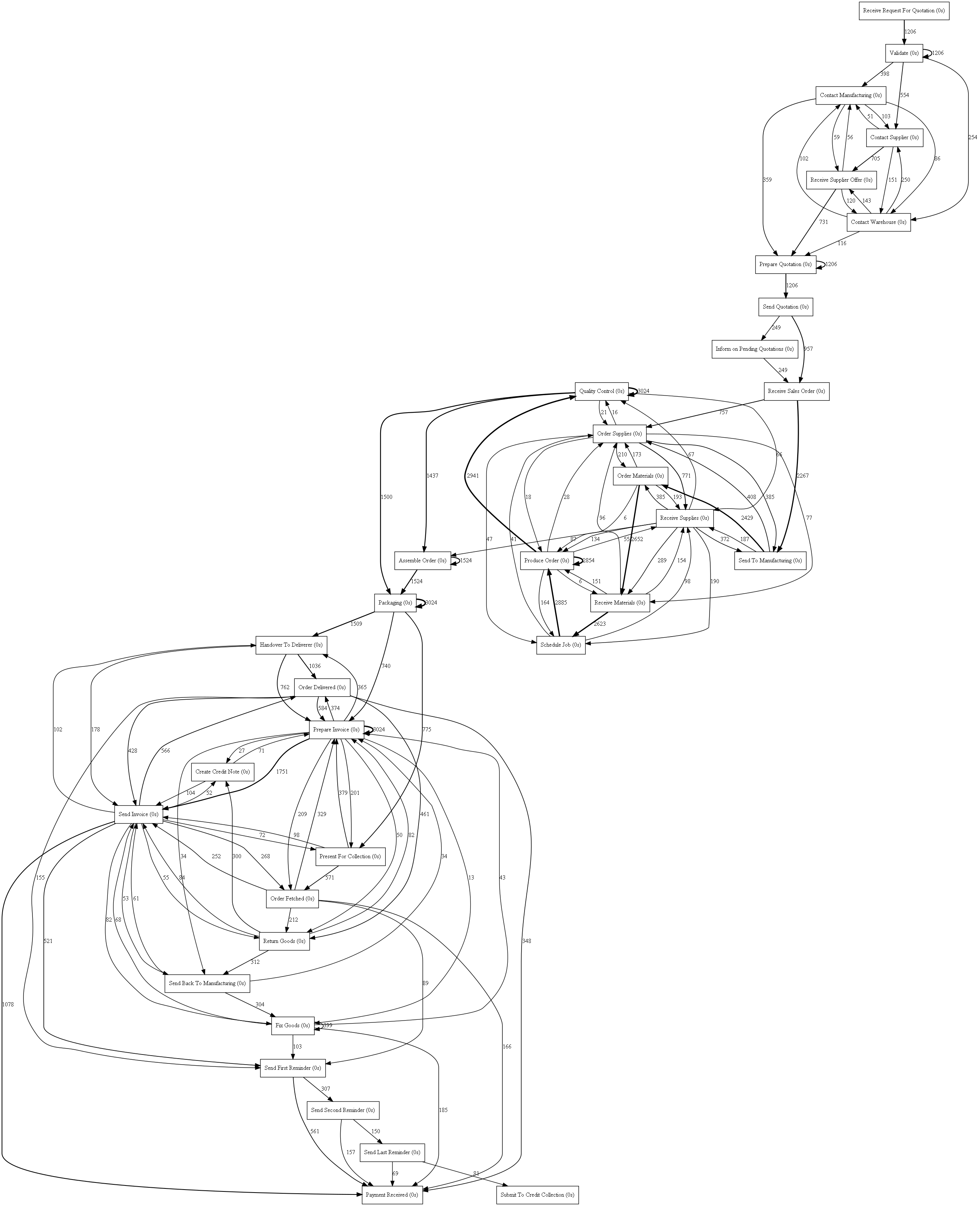

from pm4py.algo.discovery.dfg import algorithm as dfg_discovery

dfg = dfg_discovery.apply(event_log)

from pm4py.visualization.dfg import visualizer as dfg_visualization

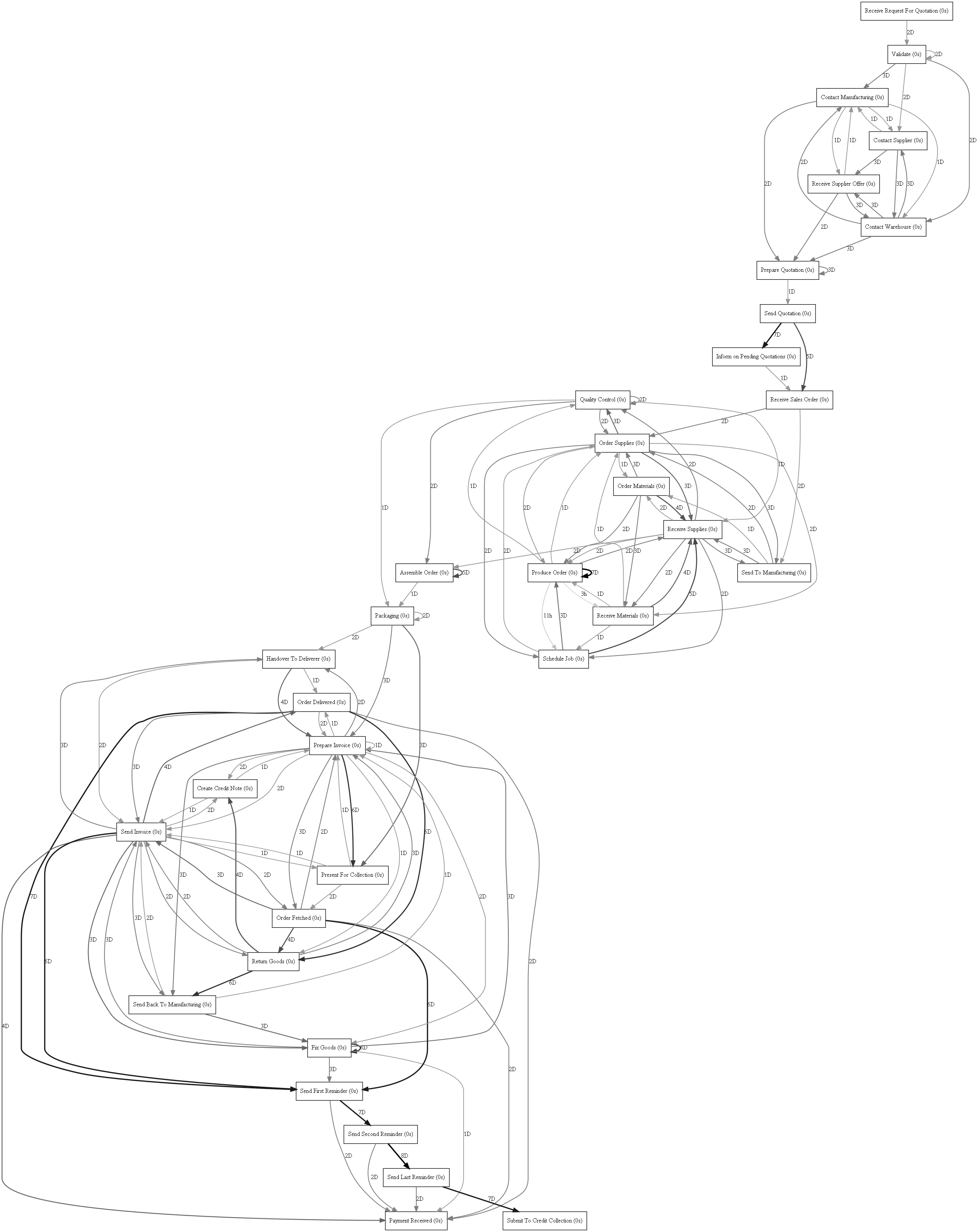

gviz = dfg_visualization.apply(dfg, log=event_log, variant=dfg_visualization.Variants.FREQUENCY)

dfg_visualization.view(gviz)

from pm4py.algo.discovery.dfg import algorithm as dfg_discovery

from pm4py.visualization.dfg import visualizer as dfg_visualization

dfg = dfg_discovery.apply(event_log, variant=dfg_discovery.Variants.PERFORMANCE)

gviz = dfg_visualization.apply(dfg, log=event_log, variant=dfg_visualization.Variants.PERFORMANCE)

dfg_visualization.view(gviz)

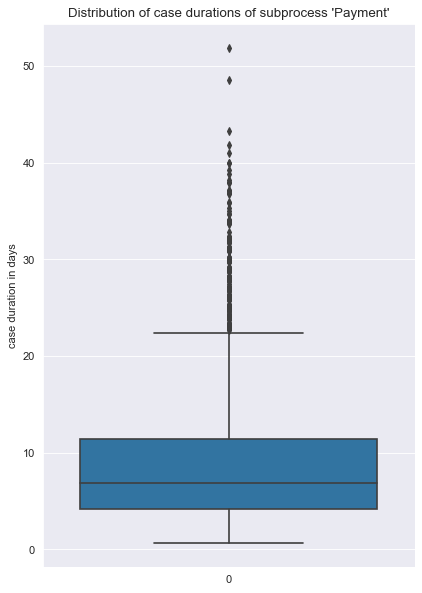

Filtering for subprocess ‘Payment’ (“Send Invoice”, “Payment Received”)

We are interested in the subprocess payment and its cycle time, that may be in focus for a recent performance project and lets see if and how pm4py can help us get insight.

# drop some unneeeded cols

otc_event_log_only_so2 = otc_event_log_only_so.drop(['lifecycle', 'status'], axis=1)

# undocumented(?) pm4py requirement: column sequence in df is important

first_column_to_be = otc_event_log_only_so2.pop('sales_order_id')

otc_event_log_only_so2.insert(0, 'sales_order_id', first_column_to_be)

otc_event_log_only_so2.shape

(71321, 12)

#parameters = {log_converter.Variants.TO_EVENT_LOG.value.Parameters.CASE_ID_KEY: 'case:concept:name'} # identify the case_id_key name (if not change it will simply be the mane of the coloumn)

#event_log_unfiltered = log_converter.apply(otc_event_log_only_so2, parameters=parameters, variant=log_converter.Variants.TO_EVENT_LOG)

#otc_event_log_only_so2.head()

Looking at all activities of order-17-56542:

# before 'between filter'

otc_event_log_only_so2[otc_event_log_only_so2['case:concept:name'] == 'order-17-56542'].sort_values(by=['sales_order_id', 'timestamp'])

| sales_order_id | quotation_id | resource | activity | action | timestamp | activity_cost | case_id | case:concept:name | concept:name | time:timestamp | @@index | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | order-17-56542 | NaN | Katherine | Receive Sales Order | order-17-56542-0000001 | 2017-10-17 12:37:22 | NaN | -order-17-56542 | order-17-56542 | Receive Sales Order | 2017-10-17 12:37:22 | 0 |

| 1 | order-17-56542 | NaN | Jonathan | Send To Manufacturing | order-17-56542-0000002 | 2017-10-19 15:30:40 | NaN | -order-17-56542 | order-17-56542 | Send To Manufacturing | 2017-10-19 15:30:40 | 1 |

| 6048 | order-17-56542 | NaN | Amy | Order Materials | order-17-56542-0006049 | 2017-10-22 14:02:01 | 4344.947965 | -order-17-56542 | order-17-56542 | Order Materials | 2017-10-22 14:02:01 | 6048 |

| 6049 | order-17-56542 | NaN | Julia | Receive Materials | order-17-56542-0006050 | 2017-10-25 11:30:24 | NaN | -order-17-56542 | order-17-56542 | Receive Materials | 2017-10-25 11:30:24 | 6049 |

| 15144 | order-17-56542 | NaN | Gregory | Schedule Job | order-17-56542-0015145 | 2017-10-27 11:32:05 | NaN | -order-17-56542 | order-17-56542 | Schedule Job | 2017-10-27 11:32:05 | 15144 |

| 15145 | order-17-56542 | NaN | Jerry | Produce Order | order-17-56542-0015146 | 2017-11-03 18:34:00 | 25810.513393 | -order-17-56542 | order-17-56542 | Produce Order | 2017-11-03 18:34:00 | 15145 |

| 26139 | order-17-56542 | NaN | Jerry | Produce Order | order-17-56542-0026140 | 2017-11-13 11:09:17 | NaN | -order-17-56542 | order-17-56542 | Produce Order | 2017-11-13 11:09:17 | 26139 |

| 15146 | order-17-56542 | NaN | Mary | Quality Control | order-17-56542-0015147 | 2017-11-15 13:43:24 | 3156.640994 | -order-17-56542 | order-17-56542 | Quality Control | 2017-11-15 13:43:24 | 15146 |

| 26140 | order-17-56542 | NaN | Mary | Quality Control | order-17-56542-0026141 | 2017-11-17 12:47:42 | NaN | -order-17-56542 | order-17-56542 | Quality Control | 2017-11-17 12:47:42 | 26140 |

| 34110 | order-17-56542 | NaN | Phillip | Packaging | order-17-56542-0034111 | 2017-11-19 13:48:13 | 388.394137 | -order-17-56542 | order-17-56542 | Packaging | 2017-11-19 13:48:13 | 34110 |

| 44359 | order-17-56542 | NaN | Phillip | Packaging | order-17-56542-0044360 | 2017-11-22 13:29:27 | NaN | -order-17-56542 | order-17-56542 | Packaging | 2017-11-22 13:29:27 | 44359 |

| 34111 | order-17-56542 | NaN | Phillip | Handover To Deliverer | order-17-56542-0034112 | 2017-11-24 18:18:27 | NaN | -order-17-56542 | order-17-56542 | Handover To Deliverer | 2017-11-24 18:18:27 | 34111 |

| 47383 | order-17-56542 | NaN | Virginia | Prepare Invoice | order-17-56542-0047384 | 2017-11-27 15:50:47 | NaN | -order-17-56542 | order-17-56542 | Prepare Invoice | 2017-11-27 15:50:47 | 47383 |

| 57780 | order-17-56542 | NaN | Virginia | Prepare Invoice | order-17-56542-0057781 | 2017-11-29 12:06:34 | NaN | -order-17-56542 | order-17-56542 | Prepare Invoice | 2017-11-29 12:06:34 | 57780 |

| 47384 | order-17-56542 | NaN | Virginia | Send Invoice | order-17-56542-0047385 | 2017-12-01 09:17:18 | NaN | -order-17-56542 | order-17-56542 | Send Invoice | 2017-12-01 09:17:18 | 47384 |

| 34112 | order-17-56542 | NaN | Stephen | Order Delivered | order-17-56542-0034113 | 2017-12-05 14:38:25 | NaN | -order-17-56542 | order-17-56542 | Order Delivered | 2017-12-05 14:38:25 | 34112 |

| 47385 | order-17-56542 | NaN | Virginia | Payment Received | order-17-56542-0047386 | 2017-12-07 09:43:37 | NaN | -order-17-56542 | order-17-56542 | Payment Received | 2017-12-07 09:43:37 | 47385 |

# filter df

filtered_otc_event_log = pm4py.filter_between(otc_event_log_only_so2, "Send Invoice", "Payment Received")

filtered_otc_event_log.shape

(8122, 12)

After filtering with filter_between only those activities of order-17-56542 remain that belong to the payment subprocess.

# after filter

filtered_otc_event_log = pm4py.filter_between(otc_event_log_only_so2, "Send Invoice", "Payment Received")

filtered_otc_event_log.sort_values(by=['sales_order_id', 'timestamp']).head()

| sales_order_id | quotation_id | resource | activity | action | timestamp | activity_cost | case_id | case:concept:name | concept:name | time:timestamp | @@index | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 47384 | order-17-56542 | NaN | Virginia | Send Invoice | order-17-56542-0047385 | 2017-12-01 09:17:18 | NaN | -order-17-56542 | order-17-56542##@@0 | Send Invoice | 2017-12-01 09:17:18 | 47384 |

| 34112 | order-17-56542 | NaN | Stephen | Order Delivered | order-17-56542-0034113 | 2017-12-05 14:38:25 | NaN | -order-17-56542 | order-17-56542##@@0 | Order Delivered | 2017-12-05 14:38:25 | 34112 |

| 47385 | order-17-56542 | NaN | Virginia | Payment Received | order-17-56542-0047386 | 2017-12-07 09:43:37 | NaN | -order-17-56542 | order-17-56542##@@0 | Payment Received | 2017-12-07 09:43:37 | 47385 |

| 47387 | order-17-56543 | NaN | George | Send Invoice | order-17-56543-0047388 | 2018-01-01 10:18:15 | NaN | -order-17-56543 | order-17-56543##@@0 | Send Invoice | 2018-01-01 10:18:15 | 47387 |

| 34115 | order-17-56543 | NaN | Cheryl | Order Fetched | order-17-56543-0034116 | 2018-01-03 13:45:12 | NaN | -order-17-56543 | order-17-56543##@@0 | Order Fetched | 2018-01-03 13:45:12 | 34115 |

parameters = {log_converter.Variants.TO_EVENT_LOG.value.Parameters.CASE_ID_KEY: 'case:concept:name'} # identify the case_id_key name (if not change it will simply be the mane of the coloumn)

event_log__otc = log_converter.apply(filtered_otc_event_log, parameters=parameters, variant=log_converter.Variants.TO_EVENT_LOG)

event_log__otc

[{'attributes': {'concept:name': 'order-17-56542##@@0'}, 'events': [{'sales_order_id': 'order-17-56542', 'quotation_id': nan, 'resource': 'Virginia', 'activity': 'Send Invoice', 'action': 'order-17-56542-0047385', 'timestamp': Timestamp('2017-12-01 09:17:18'), 'activity_cost': nan, 'case_id': '-order-17-56542', 'concept:name': 'Send Invoice', 'time:timestamp': Timestamp('2017-12-01 09:17:18'), '@@index': 47384}, '..', {'sales_order_id': 'order-17-56542', 'quotation_id': nan, 'resource': 'Virginia', 'activity': 'Payment Received', 'action': 'order-17-56542-0047386', 'timestamp': Timestamp('2017-12-07 09:43:37'), 'activity_cost': nan, 'case_id': '-order-17-56542', 'concept:name': 'Payment Received', 'time:timestamp': Timestamp('2017-12-07 09:43:37'), '@@index': 47385}]}, '....', {'attributes': {'concept:name': 'order-17-59565##@@0'}, 'events': [{'sales_order_id': 'order-17-59565', 'quotation_id': nan, 'resource': 'George', 'activity': 'Send Invoice', 'action': 'order-17-59565-0057776', 'timestamp': Timestamp('2017-05-16 19:34:52'), 'activity_cost': nan, 'case_id': '-order-17-59565', 'concept:name': 'Send Invoice', 'time:timestamp': Timestamp('2017-05-16 19:34:52'), '@@index': 57775}, '..', {'sales_order_id': 'order-17-59565', 'quotation_id': nan, 'resource': 'George', 'activity': 'Payment Received', 'action': 'order-17-59565-0057780', 'timestamp': Timestamp('2017-06-08 12:14:44'), 'activity_cost': nan, 'case_id': '-order-17-59565', 'concept:name': 'Payment Received', 'time:timestamp': Timestamp('2017-06-08 12:14:44'), '@@index': 57779}]}]

from pm4py.statistics.traces.generic.log import case_statistics

all_case_durations = case_statistics.get_all_case_durations(event_log__otc, parameters={case_statistics.Parameters.TIMESTAMP_KEY: "time:timestamp"}

)

all_case_durations_days = pd.Series(all_case_durations)/86400 # days

from matplotlib.pyplot import figure

figure(figsize=(6, 9), dpi=80)

sns.boxplot(data=all_case_durations_days)

plt.title("Distribution of case durations of subprocess 'Payment'")

plt.ylabel("case duration in days")

plt.xlabel("")

plt.show()

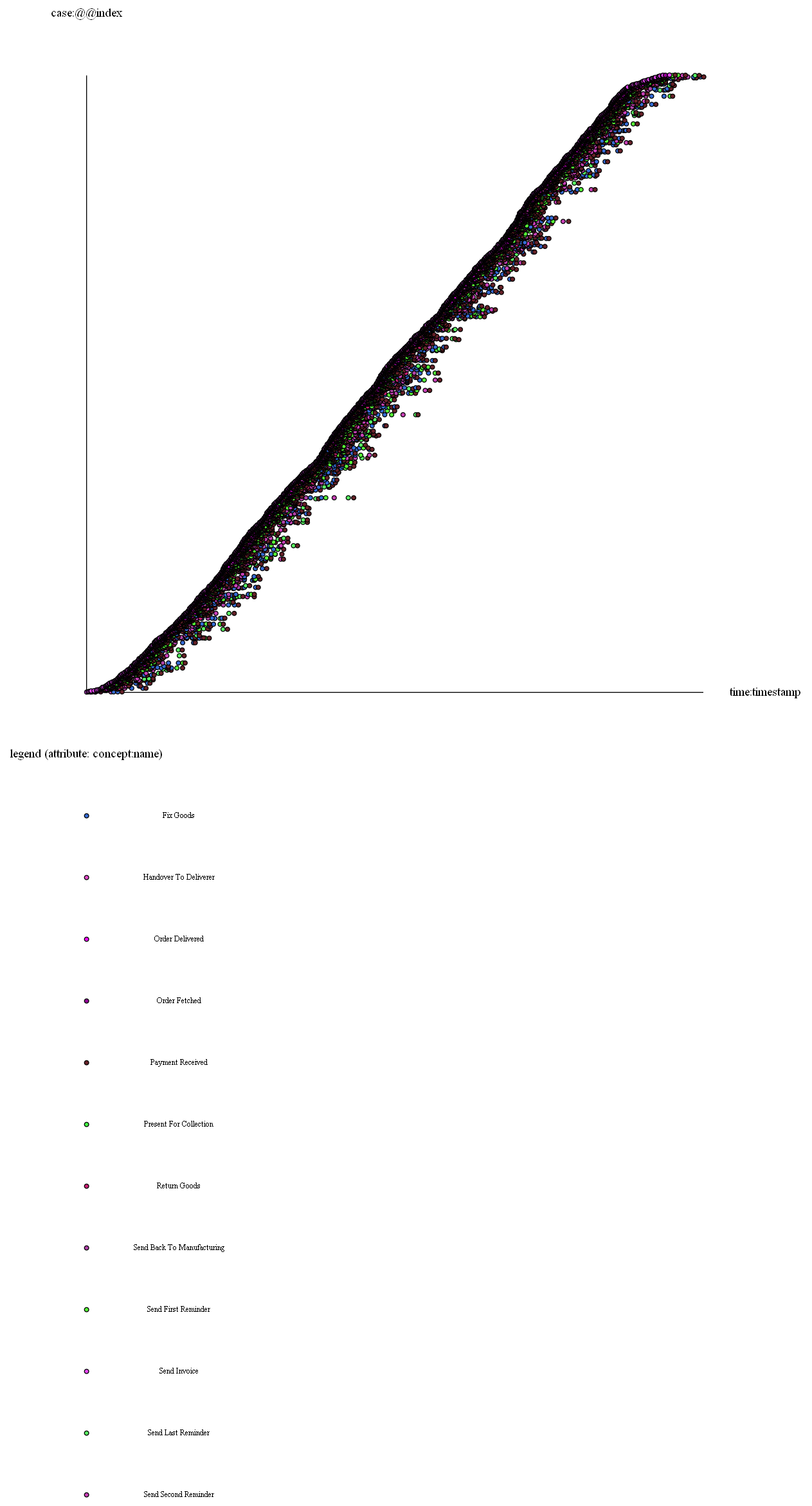

# it seems no title can be parametrized through view_dotted_chart method

pm4py.view_dotted_chart(event_log__otc)

Having only limited time to dig in, I managed to do quite a lot of types of analyses with pm4py, however it is not that widely used and the documentation of its methods are bare bone basic. Often essential requirements in order to make certain methods work are not communicated unfortunately. For example there seems to be this requirement hat the case id column has to be the first column in Logdata DataFrame, so that the between filter actually returns what was intended to be returned. While searching for these kind of oddities certainly sucks, I will follow pm4py progress. Cheers!